TotalCapture is a real-time, full-body mocap system that uses standard video cameras together with inertial measurement units (IMUs) of the kind typically found in mobile phones. The system requires no optical markers or specialised infrared cameras and can be used indoors or out, giving filmmakers and video-game artists a flexibility not found in existing mocap technologies.

This motion capture technology could transform creative industries. It was shown at the European Conference on Visual Media Production (CVMP), which took place on 11 and 12 December at the British Film Institute in London.

Total Capture: 3D Human Pose Estimation Fusing Video and Inertial Sensors

Matthew Trumble, Andrew Gilbert, Charles Malleson, Adrian Hilton, and John Collomosse, Centre for Vision, Speech & Signal Processing, University of Surrey, United Kingdom. Appeared at the British Machine Vision Conference, BMVC 2017 (oral).

Professor Adrian Hilton, Director of the Centre for Vision, Speech and Signal Processing (CVSSP) at the University of Surrey, said: "We are very excited about this new approach to capturing live, full body performances outdoors. What we demonstrated at this year's conference is something that could open new possibilities for artists who use motion capture technology to deliver immersive experiences.

"It will give film makers the freedom to imagine a world where they are not restricted to a sound stage with expensive cameras to accurately capture a performance for a video game or CGI character in a film. With this technology, it is easy to capture the performance of a subject in a variety of locations using a simple and low-cost set up."

Dr Charles Malleson, Lead Researcher for TotalCapture at CVSSP, says: "Motion capture technology has developed massively over the past decade, improving the final image we see on screen immeasurably over what we saw even five or ten years ago. But when it comes to improvements, the focus has remained on the final image and the technology to capture it has remained costly and inflexible. Our system has modest hardware requirements, produces a quality image and can be used in outdoor or indoor environments. It will bring the power and freedom of motion capture to film makers at home as well as within the film and games industry."

Abstract

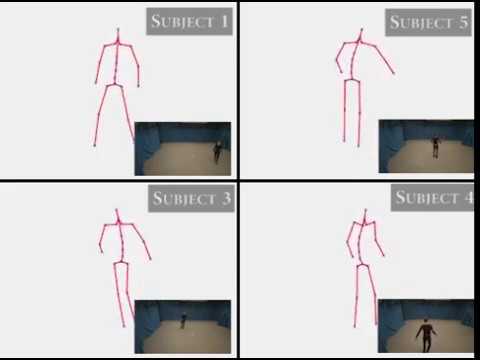

We present an algorithm for fusing multi-viewpoint video (MVV) with inertial measurement unit (IMU) sensor data to accurately estimate 3D human pose. A 3-D convolutional neural network is used to learn a pose embedding from volumetric probabilistic visual hull data (PVH) derived from the MVV frames. We incorporate this model within a dual stream network integrating pose embeddings derived from MVV and a forward kinematic solve of the IMU data. A temporal model (LSTM) is incorporated within both streams prior to their fusion. Hybrid pose inference using these two complementary data sources is shown to resolve ambiguities within each sensor modality, yielding improved accuracy over prior methods. A further contribution of this work is a new hybrid MVV dataset (TotalCapture) comprising video, IMU and a skeletal joint ground truth derived from a commercial motion capture system. The dataset is available online at http://cvssp.org/data/totalcapture/
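To make the volumetric input more concrete, here is a minimal sketch of how a probabilistic visual hull could be built from per-view foreground mattes. It assumes one common formulation, in which a voxel's occupancy is the product of the foreground probabilities at its projection in every view; the abstract does not spell out the formula. The `Projector` callables, the matte layout and the rule that a voxel outside any view scores zero are all assumptions made for illustration.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[int, int]                          # (column, row)
Matte = Sequence[Sequence[float]]                # matte[row][col], values in [0, 1]
Projector = Callable[[Point3], Optional[Pixel]]  # hypothetical camera model


def probabilistic_visual_hull(
    voxel_centres: Sequence[Point3],
    views: Sequence[Tuple[Projector, Matte]],
) -> List[float]:
    """Return one occupancy probability per voxel centre.

    Each view is a (project, matte) pair: `project` maps a 3D point to a
    pixel (or None if it cannot be projected), and `matte` holds the
    per-pixel foreground probability for that camera.
    """
    occupancy: List[float] = []
    for centre in voxel_centres:
        prob = 1.0
        for project, matte in views:
            pixel = project(centre)
            if pixel is None:
                prob = 0.0  # assumption: unseen voxels count as empty
                break
            col, row = pixel
            if not (0 <= row < len(matte) and 0 <= col < len(matte[row])):
                prob = 0.0  # assumption: voxels outside the image count as empty
                break
            prob *= matte[row][col]
        occupancy.append(prob)
    return occupancy
```

In a real pipeline the projector would come from calibrated camera parameters and the mattes from background subtraction; the resulting voxel grid is the kind of volume a 3D convolutional network could consume.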

Network overview of our approach: our two-stream network fuses IMU data with volumetric (PVH) data derived from multiple viewpoint video (MVV) to learn an embedding for 3-D joint locations (human pose).
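The IMU stream relies on a forward kinematic solve, which can be sketched as follows. This is an illustrative version only, assuming each bone has a known rest-pose offset and a world-space orientation (as an IMU attached to that limb might provide); the skeleton layout, the function name and the argument conventions are hypothetical and not taken from the paper.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]  # row-major 3x3 rotation matrix


def rotate(rotation: Mat3, vector: Vec3) -> Vec3:
    """Apply a 3x3 rotation matrix to a 3D vector."""
    return tuple(
        sum(rotation[i][k] * vector[k] for k in range(3)) for i in range(3)
    )


def forward_kinematics(
    parents: Sequence[int],
    rest_offsets: Sequence[Vec3],
    bone_rotations: Sequence[Mat3],
    root_position: Vec3,
) -> List[Vec3]:
    """Compute world-space joint positions from per-bone orientations.

    parents[i] is the parent joint index of joint i (-1 for the root), and
    joints must be ordered so that every parent precedes its children.
    rest_offsets[i] is the rest-pose vector from the parent to joint i, and
    bone_rotations[i] is the world orientation of that bone relative to rest.
    """
    positions: List[Vec3] = []
    for joint, parent in enumerate(parents):
        if parent < 0:
            positions.append(root_position)
            continue
        # Rotate the rest-pose bone vector, then attach it to the parent joint.
        offset = rotate(bone_rotations[joint], rest_offsets[joint])
        base = positions[parent]
        positions.append(
            (base[0] + offset[0], base[1] + offset[1], base[2] + offset[2])
        )
    return positions
```

For example, a two-bone arm with unit-length bones along the x axis, an unrotated upper arm and a forearm turned 90 degrees about the z axis places the wrist at (1, 1, 0). Because the orientations are absolute rather than relative to the parent, errors in one sensor do not accumulate down the chain, although bone-length and calibration errors still do.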

Paper

Total Capture: 3D Human Pose Estimation Fusing Video and Inertial Sensors

Matthew Trumble, Andrew Gilbert, Charles Malleson, Adrian Hilton, and John Collomosse,

BMVC 2017

Get the TotalCapture Dataset

Credits: University of Surrey; The Centre for Vision, Speech and Signal Processing (CVSSP)
